I had lunch with my friend Christian the other day (we went to wagamamas, I had Ginger Chicken Udon – delicious) and he was telling me about a company who work according to what sounded very much like developer anarchy – basically everyone is allowed to go their own route, use whatever tools or languages they please, using any framework they want to, to deliver projects. This sounded like fresh lunacy, and I couldn’t get past how much of a nightmare it must be as a build/release or sysadmin guy, to make all of that come together and then look after it.

Developer Anarchy

However, it did get me thinking about the tools and frameworks that I’ve used over the years, and whether they were too restrictive. That led me on to thinking about some of the business processes I’ve worked with, and how counter-productive they could be too. So I decided to list some of these tools, processes and frameworks, and critique them with respect to how inflexible and restrictive they can be.

Maven and Ant

Rather predictably, I’ll start with Maven. Maven is both a build tool and a framework, it uses plugins to hide the complexities of the tasks it performs “behind the scenes”. Rather than explicitly coding out what you want to compile, or what unit tests you want to run, Maven says “if you put your files here, and call this plugin, I’ll take care of it”. Most of the Maven plugins are fairly configurable, but only to an extent, and you have to consult the (very hit-and-miss) documentation every time you want to do something even slightly off the beaten path. It very much relies on the “convention over configuration” principle – as long as you conform to its convention, you’ll be fine. And it’s this characteristic which inevitably makes it so restrictive. It’s testament to how difficult it is to veer from the “Maven way” that I almost always end up using the antrun task to call an ant script to do most of the non-standard Maven things that I want to do with my builds. For instance, I currently have a requirement to copy my distributable to 2 separate repositories, one with some supporting files (which need to be filtered) and one without. This isn’t a particularly weird requirement, and you can probably do it with Maven, but despite the fact that I’ve worked with Maven on a daily basis for over 3 years, I still find it much easier to just call Ant to do stuff like this, rather than write my own plugin or go trawling through Maven’s online documentation.

Dev Team Ring-Fencing

I have worked in companies where discussion and interaction between development and QA/NetOps etc have actively been discouraged. The idea behind this insanity has been to give the developers time to do their work, without being constantly interrupted by testers, release engineers etc and so on. I can just about understand how this can happen, but I can’t really understand how someone can come to the conclusion that ring-fencing a whole team and discouraging interactions with other teams is a sustainable and sensible solution. The solution should be to find out exactly WHY the QA or NetOps team need to constantly interrupt the developers. Being told that you can’t talk freely with other teams develops business silos which can be very hard to break down, and also prevents people from getting the information they require, which can seriously impact productivity.

Audits

If I had a pound and a pat on the head every time I heard the phrase “we can’t do that, it would never get past the auditors”, by now I’d be a rich man with a flat head. Working in build and release engineering, I quite often get caught up with security and audit issues when it comes to doing deployments – what account should be used to do deployments to production? How often should passwords be changed? How do we pass the passwords? Are they encrypted? What level of access should release engineers have to the codebase? All of these questions come up at one point or another. In my experience, developers, sysadmins, managers and the like, will always come back with “you can’t do that, it’ll never get past the auditors” whenever you propose a new or slightly radical way of doing things, it’s like their safety net, and I think it’s just their way of saying “we don’t like that, it’s different, different is bad”. My suggestion is to speak with the auditors and challenge what you’ve been told. If that’s not possible, try to find out exactly what rule you’re breaking and then find out what the guidelines for compliance are on the rule in question – google is remarkably good for this, so are forums and meetups. In my experience, most of the so called compliance rules are not nearly as draconian or restrictive as many of my own colleagues would have me believe.

Meetings

Oh my god. So many meetings. I now hate pretty much all meetings. Prior to working at Caplin Systems I think my job was to attend meetings all day. I can’t really remember, I can’t actually remember a time before meetings.

The worst meetings of all are the “weekly status meetings”. I don’t know if these are widely popular but if they are, and you have to attend them, then you have my sympathies. They amount to a lot of people talking about stuff that’s already happened. Quite a lot of it has nothing to do with you, and absolutely all of it could be communicated more efficiently without needing a meeting. Also, someone takes notes. Taking notes in a meeting about stuff that’s already happened so that they can tell everyone else about what happened in a meeting about stuff that’s already happened. The pain is still raw. Don’t do this, it’s stupid. Do demos instead, they’re more interesting and we actually get to SEE stuff, rather than just hear someone droning on about stuff they’ve already done. Meetings are not productive, and they stifle my creativity by making me want to go to sleep.

MS Project

“You have to work on this today, and it has to take you eight hours, and then you have to work on a different project for 50% tomorrow, and then you have to work on bla bla bla”. No. Wrong. This is totally unrealistic. You only have to be out by 10% with your LOE (Level of Effort, or SWAG “Sophisticated Wild-Arsed Guess”) to throw the whole thing into disarray. Be sick for a day and they system is in free-fall, one project needs to shift by 1 day and suddenly you’re clashing with all these other projects and you’re assigned to work a 16 hour day. MS Project is often the tool of choice for companies who follow the Waterfall process, and it’s probably the process as much as the tool itself which causes this mess, but the tool certainly doesn’t help. I prefer to use tools that allow me to manage my time on a 2 weekly basis (like Acunote, see here), the decision of when I should work on each task is determined by me and the team I’m working with, and it has worked very successfully so far.

Source Control Systems

Aside from Visual Source Safe, I’ve never worked with a truly awful SCM. They all have really neat features and at the end of the day they perform an exceedingly important job. However, the everyday use of these tools varies from one to another, and some are more restrictive and controlling than others. For instance, Perforce, which has long been one of my favorites, only allows you to have 1 root for your client spec – this is a pain in a CI system if you can’t tell your server to swithch clientspec (or workspace). I’m currently working with Perforce coupled with Go as the Continuous Integration server, and Go forces me to check out my files to “C:\Program Files\Cruise Agent” but my build has a requirement to also sync some files to D:\. This can’t be done with Perforce because you can only have one root directory (in this case C:\).

I like SCM systems where it’s easy to create branches. I’m a big fan of personal branches, somewhere to check-in my personal work, POCs, or just incomplete bug fixes that I’d like to check-in somewhere safe before I go home. Subversion and Git make this easy, and they also allow you to merge your changes to another branch. I personally find Subversion better at this than Perforce, but that might just be me (I get confused with Perforce’s “yours” and “theirs”). SCM systems that allow for easy branching are far less restrictive, and encourage you to try something different, without having to worry about checking in to the main branch and breaking everything.

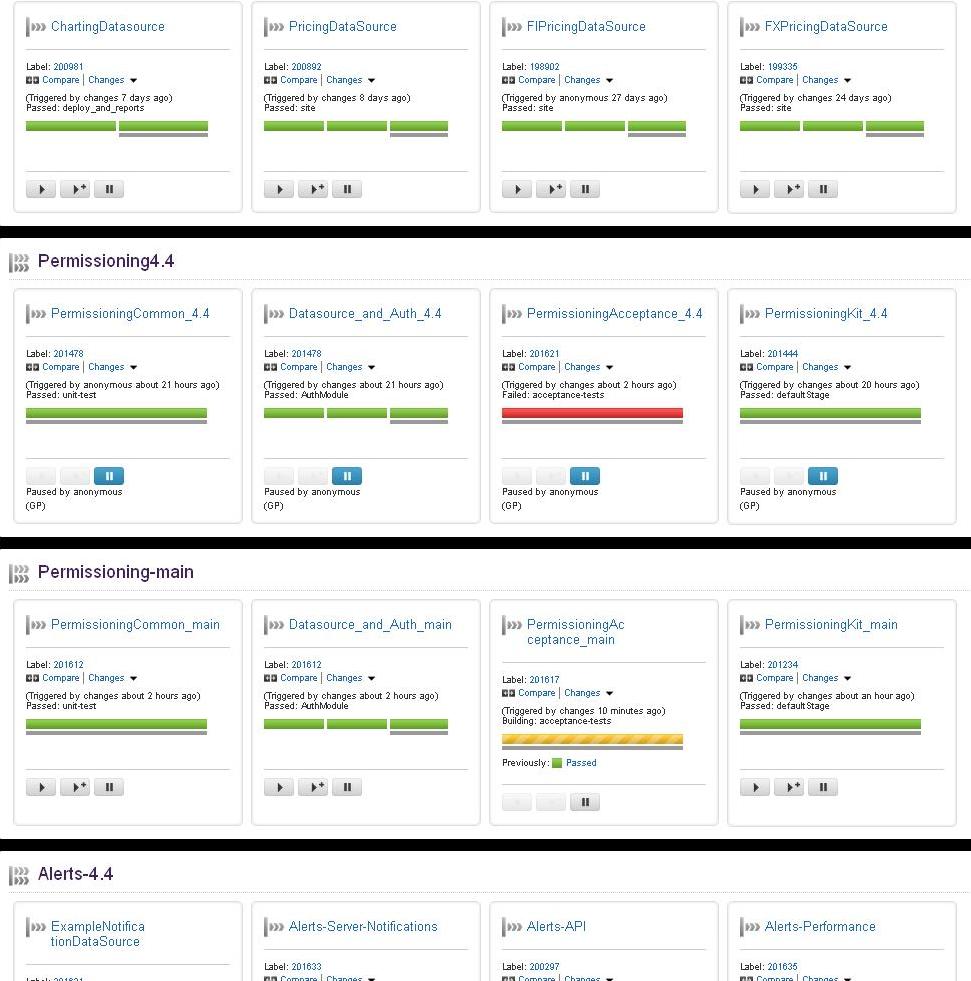

Continuous Integration Systems

C.I. systems can really get in the way of your productivity if they:

- Take ages to setup a build job – How can I be productive if I’m spending so long creating a build job, or waiting for one to be made?

- Keep reporting false-negatives – How can I be productive if I’m always looking into a failing build which isn’t really failing?

- Don’t provide a friendly interface

- Don’t provide you with the information that you need!

A good C.I. system should be a service, and a hub of information. It should be easy to copy build jobs or create new ones and it should provide all users with a single point of reference for all the build output, like test results and static analysis results. At a previous job, Pankaj Sahu, the build engineer, setup a system of automatically creating build jobs in Bamboo using Selenium and Ant, it was brilliant. Bamboo also allows you to copy jobs, which is in itself a real time saver. Jenkins also has a similar feature. Go, which is a good C.I system is slightly harder work, but it’s main advantages lay elsewhere.